Automation and Orchestration of Microservices

Almost any entity, whatever its size, relies on computing resources, whether that is a single smartphone or a data center full of supercomputers. At some point (often as early as a single smartphone), making those resources useful, and keeping them useful in the ever-changing world we live in, becomes difficult. Beyond that point, it gets ever closer to impossible.

Monolithic solutions are powerful and have well-defined, concise administrative interfaces; however, they quickly prove impractical. Both software and hardware change as application needs evolve, and the applications themselves change too! Monolithic solutions simply can't adapt fast enough to keep up, and can even become more of a problem than the one they were meant to solve.

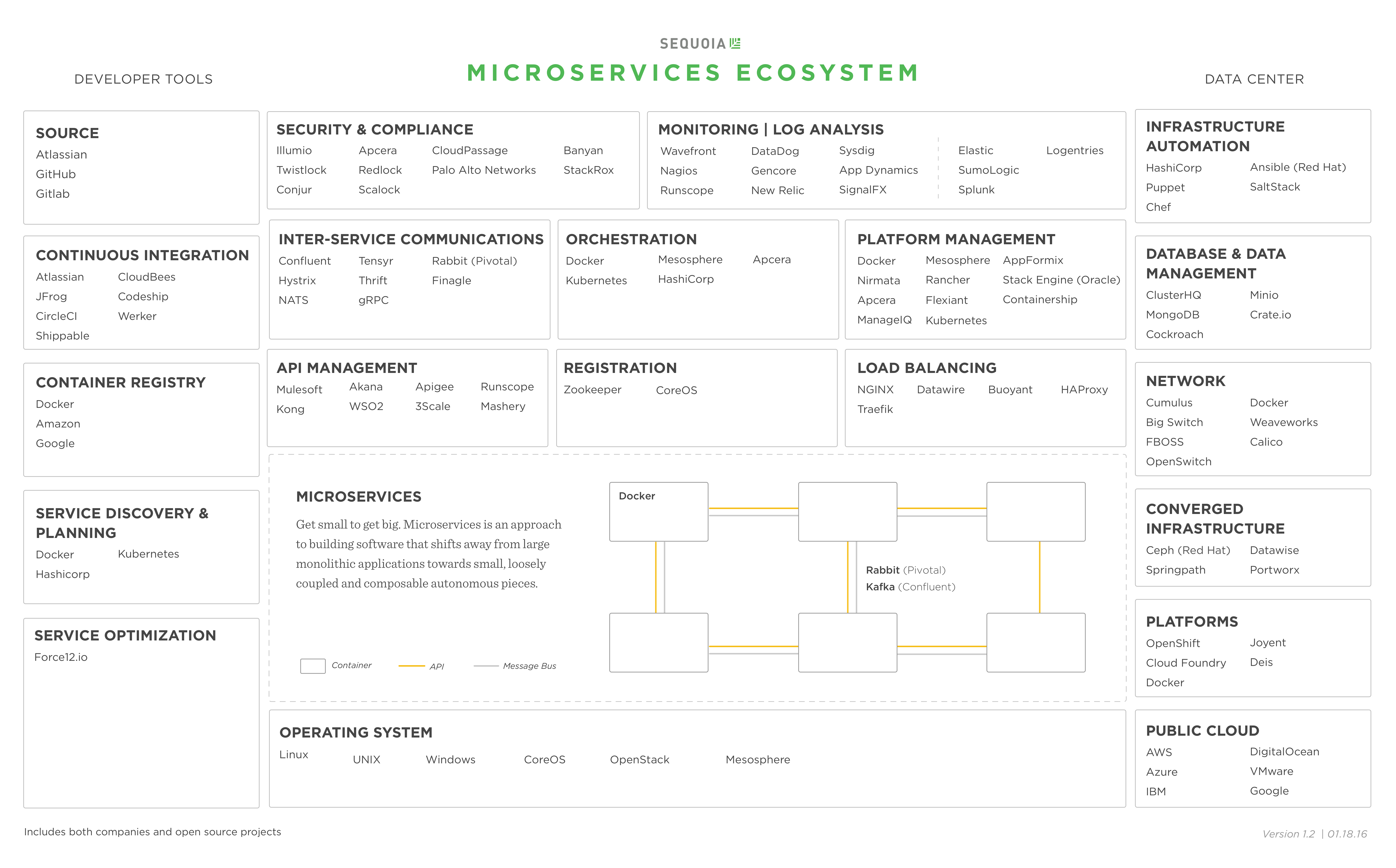

To be fully and painlessly realized, computing resource utilization naturally had to scale down. Hence the advent of "microservices": smaller, better-defined, task-specific ways of managing computing resources that address the problems posed by the monolith. Microservices can also be combined in many different ways to create new solutions that neither their creators nor the technologists they serve initially imagined, which lets them keep up with changing software, hardware, and application needs. Docker, for instance, takes a single host's computing resources and divides them into a set of smaller, isolated environments called containers, each of which can be dedicated to a specific task within an overall application.

Microservices

You're probably wondering, and rightly so: if microservices are more numerous, wouldn't having more of them just mean more to manage? Not necessarily. Two classes of technology, automation and orchestration, play a crucial role in data center operation: they let an entire data center be treated remotely as if it were a single operating system, with automation acting as its command line interface and orchestration as its scheduler.

Automation

Automation is the process of scripting the installation of operating systems and applications, the configuration of servers, the configuration of how software communicates across hosts, and more. The same configuration can be applied to one host or to thousands, targeted through customized logical groupings (e.g. by geographic location, organization, or purpose) and packaged as reusable roles. It is a bit like using a software-defined data center framework that lets you design your own remote command line interface for the whole data center.

One such tool is called Ansible. Written in Python, Ansible has an advantage over most tools in its class in that it doesn't require an agent on remote hosts: it connects over SSH, and having Python installed on each host is enough. Most Linux and Unix-like hosts already ship with Python these days. For a simple example, the self-defined logical groupings described above could be based on location. Below, the global group is defined in the inventory to include the hosts in the tokyo group and in the singapore group. Each command runs the standard Linux command free -h to check memory on the hosts in the specified group.

ansible tokyo -m command -a 'free -h' -u someuser --ask-pass

ansible singapore -m command -a 'free -h' -u someuser --ask-pass

ansible global -m command -a 'free -h' -u someuser --ask-pass
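For global to cover both locations, the inventory has to declare it as a parent group, for example with a [global:children] section listing tokyo and singapore in an INI-format inventory. The minimal Python sketch below models how such a parent group expands to concrete hosts, and builds the argument list for the ad hoc command. The host names and the helper functions resolve_hosts and ad_hoc_command are hypothetical; this is a simplified model, not Ansible's own inventory code.

```python
import shlex
from typing import Dict, List, Optional, Set

# Hypothetical inventory data. Leaf groups list hosts; parent groups list child
# groups, much like [tokyo], [singapore] and [global:children] in an INI inventory.
GROUP_HOSTS: Dict[str, List[str]] = {
    "tokyo": ["tokyo-web-1", "tokyo-web-2"],
    "singapore": ["sg-web-1"],
}
GROUP_CHILDREN: Dict[str, List[str]] = {
    "global": ["tokyo", "singapore"],
}


def resolve_hosts(group: str, seen: Optional[Set[str]] = None) -> List[str]:
    """Return the sorted hosts in `group`, including hosts of all its child groups."""
    seen = set() if seen is None else seen
    if group in seen:  # skip groups already visited (guards against cycles)
        return []
    seen.add(group)
    hosts = set(GROUP_HOSTS.get(group, []))
    for child in GROUP_CHILDREN.get(group, []):
        hosts.update(resolve_hosts(child, seen))
    return sorted(hosts)


def ad_hoc_command(group: str, user: str) -> List[str]:
    """Build the argv for a 'free -h' ad hoc command against `group`."""
    return ["ansible", group, "-m", "command", "-a", "free -h", "-u", user, "--ask-pass"]


if __name__ == "__main__":
    print(resolve_hosts("global"))  # ['sg-web-1', 'tokyo-web-1', 'tokyo-web-2']
    print(shlex.join(ad_hoc_command("global", "someuser")))
    # ansible global -m command -a 'free -h' -u someuser --ask-pass
```

Keeping the command as a list rather than a single string means it could be passed straight to subprocess.run on a machine where Ansible is installed, without worrying about shell quoting of 'free -h'.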

Ansible has its own modules that abstract stateful administration of hosts: most modules describe the state a host should end up in and only make changes when the host isn't already in that state. There's a rich architecture of variables, tasks, handlers, plays, playbooks, and roles that administrators can use to do just about anything. There's even an online community hub, Ansible Galaxy, for sharing roles.
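To make "stateful" a little more concrete: an idempotent task reports "changed" only when it actually had to modify the host, and a handler (such as a service restart) that tasks notify runs once, after the tasks, and only if at least one notifying task changed something. The Python sketch below models that behavior with a hypothetical in-memory Host; the names Host, ensure_package and run_play are illustrative assumptions, not Ansible's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set, Tuple


@dataclass
class Host:
    """Hypothetical stand-in for a managed host: installed packages plus an event log."""
    name: str
    packages: Set[str] = field(default_factory=set)
    log: List[str] = field(default_factory=list)


def ensure_package(host: Host, pkg: str) -> bool:
    """Idempotent 'package is present' task. Returns True only if the host changed."""
    if pkg in host.packages:
        return False  # already in the desired state: reported as "ok"
    host.packages.add(pkg)
    return True  # state had to change: reported as "changed"


def run_play(
    host: Host,
    tasks: List[Tuple[str, Optional[str]]],
    handlers: Dict[str, Callable[[Host], None]],
) -> List[str]:
    """Run (package, handler-to-notify) tasks, then each notified handler once."""
    statuses: List[str] = []
    notified: Set[str] = set()
    for pkg, notify in tasks:
        changed = ensure_package(host, pkg)
        statuses.append("changed" if changed else "ok")
        if changed and notify is not None:
            notified.add(notify)
    # Handlers run after all tasks, at most once each, in the order they are defined.
    for name, handler in handlers.items():
        if name in notified:
            handler(host)
    return statuses


if __name__ == "__main__":
    web = Host("tokyo-web-1")
    handlers = {"restart nginx": lambda h: h.log.append("nginx restarted")}
    tasks = [("nginx", "restart nginx"), ("curl", None)]
    print(run_play(web, tasks, handlers))  # ['changed', 'changed']
    print(run_play(web, tasks, handlers))  # ['ok', 'ok'] (nothing to do, no restart)
    print(web.log)  # ['nginx restarted']
```

Running the same play twice is safe: the second run finds the host already in the desired state, so it changes nothing and triggers no restart.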

Orchestration

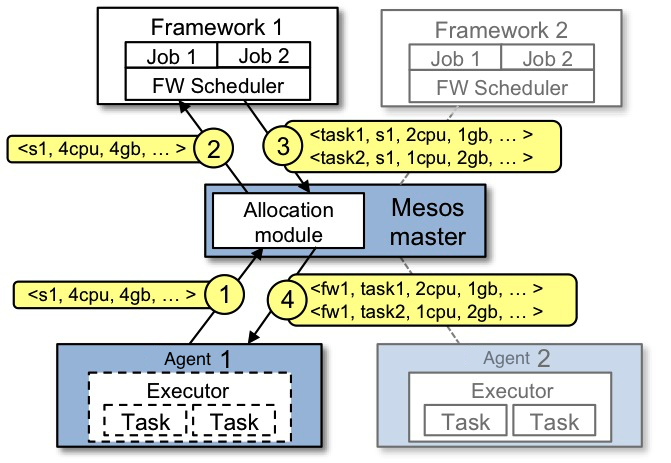

Orchestration is the act of assigning actions to others to achieve an overall result. In computing, as the name suggests, it means assigning resources (e.g. storage, CPU, RAM) across host computers to run an application.
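As a minimal sketch of "assigning resources across host computers", the Python below places work items with CPU and RAM requests onto hosts using first-fit, one of the simplest bin-packing heuristics. The node names, request names and sizes are hypothetical, and first-fit is chosen only for illustration; real orchestrators use much richer placement policies.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    """A host with some capacity to hand out (hypothetical sizes)."""
    name: str
    cpus: float
    mem_mb: int


@dataclass
class Request:
    """A unit of work asking for CPU and RAM."""
    name: str
    cpus: float
    mem_mb: int


def first_fit(requests: List[Request], nodes: List[Node]) -> Dict[str, Optional[str]]:
    """Place each request on the first node with enough free CPU and RAM.

    Returns a mapping of request name -> node name, or None if nothing fits.
    """
    free = {n.name: (n.cpus, n.mem_mb) for n in nodes}
    placement: Dict[str, Optional[str]] = {}
    for req in requests:
        placement[req.name] = None
        for node in nodes:
            cpus_left, mem_left = free[node.name]
            if req.cpus <= cpus_left and req.mem_mb <= mem_left:
                free[node.name] = (cpus_left - req.cpus, mem_left - req.mem_mb)
                placement[req.name] = node.name
                break
    return placement


if __name__ == "__main__":
    nodes = [Node("host-a", 2.0, 4096), Node("host-b", 4.0, 8192)]
    requests = [
        Request("web", 1.0, 1024),
        Request("db", 2.0, 4096),
        Request("cache", 1.0, 2048),
        Request("batch", 8.0, 1024),
    ]
    print(first_fit(requests, nodes))
    # {'web': 'host-a', 'db': 'host-b', 'cache': 'host-a', 'batch': None}
```

Note that "cache" lands back on host-a because first-fit always scans hosts in order; a request larger than any single host ("batch") simply cannot be placed.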

Where automation abstracts a single command line interface across many hosts, orchestration provides a single abstraction, spanning the computing resources of many hosts, of a VERY important part of an operating system's kernel: the scheduler. The scheduler decides which requesting processes get which computing resources, how much, when, and for how long. In case its importance hasn't sunk in yet, start thinking of the scheduler as the God inside your computer. No, you're not the God of your computer. The scheduler is, and it decides on the worthiness of your prayers.
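Real kernel schedulers are far more sophisticated, but round-robin time slicing is the classic textbook way to show a scheduler deciding who gets the CPU, when, and for how long. The Python sketch below is that simplified model; the process names, burst lengths and the quantum are made-up values, and the round_robin function is purely illustrative.

```python
from collections import deque
from typing import Dict, List, Tuple


def round_robin(bursts: Dict[str, int], quantum: int) -> List[Tuple[str, int, int]]:
    """Return the CPU timeline as (process, start, end) slices.

    Each process needs bursts[name] units of CPU time. The scheduler gives the
    CPU to the process at the head of the ready queue for at most `quantum`
    units, then moves it to the back of the queue if it still has work left.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    ready = deque(bursts.items())
    clock = 0
    timeline: List[Tuple[str, int, int]] = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # how long this process gets the CPU now
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            ready.append((name, remaining - run))  # preempted: back of the line
    return timeline


if __name__ == "__main__":
    print(round_robin({"A": 5, "B": 2, "C": 3}, quantum=2))
    # [('A', 0, 2), ('B', 2, 4), ('C', 4, 6), ('A', 6, 8), ('C', 8, 9), ('A', 9, 10)]
```

A smaller quantum makes the system feel more responsive to each process at the cost of more frequent switching; a larger one does the opposite.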

A well-received orchestrator, which itself spawned another very well-received project, the in-memory data processing engine Spark, is an Apache project called Mesos. Mesos provides an interface that scheduler frameworks can simply plug into to receive resource offers from across many hosts. Mesos decides which resources to offer to each framework, and each framework decides which offers to accept and what to run on them. Greater control can be configured to reserve specific resources for specific requests, but frameworks generally don't need to care where in the cluster their resources come from. Many frameworks for Mesos have already been developed (Spark, Chronos, Marathon, Cassandra, etc.), and more can be expected. If one doesn't currently exist for a need, it can be developed against the Mesos API. Chronos is reportedly less than 1000 lines of code. It's that easy.
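The division of labor in Mesos is often described as two-level scheduling: the master decides what to offer, and the framework decides what to accept. The Python sketch below is a toy model of that handshake only. Offer, offer_all, ToyFramework and on_offers are hypothetical names, and nothing here uses or mirrors the actual Mesos API; the toy master simply offers every agent's free resources.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Offer:
    """Hypothetical resource offer: spare resources on one agent."""
    agent: str
    cpus: float
    mem_mb: int


def offer_all(free: Dict[str, Tuple[float, int]]) -> List[Offer]:
    """First level (toy master): offer each agent's free resources to the framework."""
    return [Offer(agent, cpus, mem) for agent, (cpus, mem) in free.items()]


class ToyFramework:
    """Second level (toy framework): launches identical tasks from a pending queue."""

    def __init__(self, pending: int, cpus_per_task: float, mem_per_task: int) -> None:
        if cpus_per_task <= 0 or mem_per_task <= 0:
            raise ValueError("per-task resources must be positive")
        self.pending = pending
        self.cpus_per_task = cpus_per_task
        self.mem_per_task = mem_per_task

    def on_offers(self, offers: List[Offer]) -> Tuple[List[Tuple[str, int]], List[str]]:
        """Accept offers that fit at least one task; decline the rest.

        Returns (launches, declined): each launch pairs an agent with a task count.
        """
        launches: List[Tuple[str, int]] = []
        declined: List[str] = []
        for offer in offers:
            fit = min(
                int(offer.cpus // self.cpus_per_task),
                offer.mem_mb // self.mem_per_task,
                self.pending,
            )
            if fit > 0:
                launches.append((offer.agent, fit))
                self.pending -= fit
            else:
                # In this toy model a declined offer is just recorded.
                declined.append(offer.agent)
        return launches, declined


if __name__ == "__main__":
    framework = ToyFramework(pending=5, cpus_per_task=1.0, mem_per_task=512)
    offers = offer_all({"agent-1": (2.0, 4096), "agent-2": (0.5, 8192), "agent-3": (4.0, 1024)})
    print(framework.on_offers(offers))
    # ([('agent-1', 2), ('agent-3', 2)], ['agent-2'])
    print(framework.pending)  # 1
```

The framework never has to know the whole cluster's layout; it only reacts to whatever it is offered, which is part of why writing a new framework can be so compact.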

Please continue to follow Microscopium for more articles on Microservices, along with many other subjects in technology research and development.